GPT-4o is a multimodal AI model from OpenAI that processes text, audio, and image inputs and generates outputs across those modalities. It is designed for developers, researchers, and content creators who need multimodal understanding and generation in a single model.

The 3 main features of GPT-4o are Multimodal Understanding, Real-Time Voice, and Advanced Vision. These features allow comprehensive interaction and facilitate diverse application development.

GPT-4o pricing starts at $20 per month for the ChatGPT Plus plan. For developers, API access is billed by usage, starting at $2.50 per 1 million input tokens.

A main pro of GPT-4o is its unified multimodal processing. This capability handles text, audio, and images seamlessly. A main con of GPT-4o is its high operational cost. The cost structure limits accessibility for smaller projects.

What is GPT-4o?

GPT-4o is a flagship multimodal AI model developed by OpenAI, designed to seamlessly integrate and process text, audio, and image inputs. It excels at generating diverse outputs, making it an invaluable tool for developers, researchers, and content creators seeking advanced AI capabilities.

GPT-4o leverages a unified neural network architecture, allowing it to understand and generate content across different modalities in real time. This architecture enables advanced vision, real-time voice interactions, and sophisticated data analysis, automating complex tasks and facilitating innovative application development.

The GPT-4o model is engineered to simplify complex AI interactions through its intuitive multimodal processing. Its focus on low-latency responses, comprehensive understanding, and versatile generation makes GPT-4o highly effective for teams aiming to push the boundaries of AI applications and enhance user experiences.

What is GPT-4o Mini?

GPT-4o Mini is a more compact version of the GPT-4o model, specifically optimized for tasks requiring lower computational resources and faster response times. It is designed to provide accessible, high-quality AI capabilities for a broader range of applications, particularly within the ChatGPT ecosystem.

GPT-4o Mini leverages a streamlined neural network architecture, allowing it to perform core multimodal understanding and generation tasks with reduced latency and cost. This optimization makes it ideal for integrating advanced AI features into everyday applications and services, including various functionalities within ChatGPT.

The GPT-4o Mini model is engineered to democratize access to advanced AI by offering a balance of performance and efficiency. Its focus on speed, affordability, and seamless integration within platforms like ChatGPT makes it highly effective for developers and users seeking robust AI solutions without the overhead of larger models.

What are the GPT-4o Pricing Plans?

GPT-4o offers flexible pricing plans designed for individual users and developers. The price varies with usage, subscription level, and the model version used.

For individual users, the ChatGPT Plus plan costs $20 per month and provides enhanced access and higher usage limits. Developers can integrate GPT-4o into their applications through the API, which is billed by usage, so costs scale directly with the volume of input and output tokens processed.

The standard GPT-4o API costs $2.50 per 1 million input tokens and $10.00 per 1 million output tokens. Cached input tokens are billed at a reduced rate of $1.25 per 1 million tokens, which lowers costs for repeated prompts.
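As a rough illustration of usage-based billing, the sketch below estimates the cost of one request from the per-million-token rates quoted above. The function name and the split of input tokens into cached and uncached counts are assumptions made for this example, and the rates should be checked against current OpenAI pricing before relying on the result.

```python
# Rates in USD per 1 million tokens, as quoted in this article for the
# standard GPT-4o API. Check current pricing before relying on them.
GPT_4O_RATES = {"input": 2.50, "cached_input": 1.25, "output": 10.00}


def estimate_cost(input_tokens, output_tokens, cached_input_tokens=0, rates=GPT_4O_RATES):
    """Estimate the USD cost of one request (hypothetical helper).

    cached_input_tokens is the portion of input_tokens billed at the
    cached rate; the remainder is billed at the full input rate.
    """
    if cached_input_tokens > input_tokens:
        raise ValueError("cached_input_tokens cannot exceed input_tokens")
    uncached = input_tokens - cached_input_tokens
    total = (
        uncached * rates["input"]
        + cached_input_tokens * rates["cached_input"]
        + output_tokens * rates["output"]
    )
    return total / 1_000_000


# Example: a 12,000-token prompt (8,000 of it cached) with a 1,500-token reply.
cost = estimate_cost(12_000, 1_500, cached_input_tokens=8_000)
print(f"Estimated cost: ${cost:.4f}")  # prints "Estimated cost: $0.0350"
```

Passing a different `rates` dictionary lets the same function price another model version.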

The more cost-effective GPT-4o Mini API costs $0.15 per 1 million input tokens, with cached input tokens at $0.075 per 1 million. Output tokens for GPT-4o Mini are billed at $0.60 per 1 million tokens, making it an economical choice for high-volume or budget-conscious applications.

All developer pricing is usage-based, with transparent billing.

What are the GPT-4o Features?

GPT-4o provides a comprehensive suite of AI capabilities that cover multimodal understanding, real-time interaction, and advanced data processing. These features are designed to empower developers, researchers, and content creators with cutting-edge AI tools.

The 8 GPT-4o features are listed below.

| Number | Feature Name | Type of Feature | Function |
| --- | --- | --- | --- |
| 1 | GPT-4o Multimodal Understanding and Generation | Core AI Capability | Processes and generates content across text, audio, and image modalities. |
| 2 | GPT-4o Real-Time Voice and Conversation Capabilities | Interaction Feature | Enables natural, low-latency voice interactions and conversations. |
| 3 | GPT-4o Advanced Vision and Visual Reasoning | Perception Feature | Interprets and understands visual inputs, performing complex visual analysis. |
| 4 | GPT-4o Multilingual and Latency-Optimized Architecture | Performance & Accessibility Feature | Supports multiple languages with fast response times for global use. |
| 5 | GPT-4o Enhanced Coding and Data Analysis | Productivity Tool | Assists with code generation, debugging, and complex data interpretation. |
| 6 | GPT-4o Unified Model for Text, Audio, and Image Processing | Architectural Design | Integrates all modalities into a single, cohesive AI model for seamless processing. |
| 7 | GPT-4o Low-Latency Response System | Performance Feature | Delivers quick and efficient responses, crucial for real-time applications. |
| 8 | GPT-4o Knowledge Cutoff and Updated Data Training | Data Management Feature | Defines the scope of its training data and provides information on updates. |

1. GPT-4o Multimodal Understanding and Generation

GPT-4o Multimodal Understanding and Generation is a core AI capability that allows the model to process and generate content across text, audio, and image modalities simultaneously. This feature is fundamental to GPT-4o’s design, enabling it to interpret complex inputs that combine different data types and produce diverse, contextually rich outputs.

It is commonly used by content creators, developers, and researchers to build applications that require a holistic understanding of user intent, such as generating descriptions from images, transcribing and summarizing audio, or creating visual content based on textual prompts. A common use case for this feature is the creation of interactive educational content or marketing materials. Users can provide a combination of text, images, and audio clips, and GPT-4o can generate a cohesive narrative, script, or visual presentation.

While this capability offers unparalleled flexibility and creativity, its effectiveness can be limited by the clarity and quality of the input data. Ambiguous or low-resolution inputs may lead to less precise outputs, and the model’s interpretation might not always align with nuanced human understanding, requiring careful prompt engineering and review.

The multimodal understanding and generation capabilities are deeply integrated into GPT-4o’s unified neural network architecture, making it a powerful tool for automating complex content workflows and enhancing user engagement. It allows for a seamless transition between different forms of media, facilitating innovative application development.

However, the computational demands for processing and generating across multiple modalities can be significant, potentially impacting response times for extremely complex or large-scale tasks, and requiring robust infrastructure for optimal performance.

To leverage GPT-4o’s multimodal understanding and generation, users typically provide inputs in various formats—text prompts, uploaded images, or spoken audio—within a single interaction. The model then processes these diverse inputs, synthesizing them to generate a unified, contextually rich response that can also span multiple modalities, such as a textual description of an image, an audio summary of a document, or a visual representation of a concept.
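For developers, a mixed text-and-image input can be sketched as a single message whose content is a list of typed parts. The example below only assembles such a request body as plain data and does not send it. The part layout follows OpenAI's Chat Completions message format; the helper name, prompt, and image URL are placeholders invented for illustration.

```python
import json


def build_multimodal_request(prompt, image_url, model="gpt-4o"):
    """Return a request body combining a text prompt and an image URL.

    Hypothetical helper: it only assembles data and does not call any API.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


body = build_multimodal_request(
    "Write a one-sentence caption for this image.",
    "https://example.com/photo.jpg",  # placeholder URL
)
print(json.dumps(body, indent=2))
```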

2. GPT-4o Real-Time Voice and Conversation Capabilities

GPT-4o Real-Time Voice and Conversation Capabilities enable natural, low-latency voice interactions, transforming how users engage with AI. This feature is designed to facilitate fluid, human-like dialogues, making AI assistants and conversational interfaces more intuitive and responsive.

It is commonly employed in applications such as virtual customer support agents, interactive language learning platforms, and personal AI companions, where immediate and natural spoken communication is paramount. The ability to understand and respond in real-time significantly enhances user experience, bridging the gap between human-to-human and human-to-AI conversations.

A primary application of this feature is in creating highly responsive virtual assistants that can handle complex queries and maintain context over extended conversations. Users benefit from the immediacy and natural flow, making interactions feel less robotic. However, the performance of real-time voice can be sensitive to environmental factors, such as background noise or accents, which might occasionally lead to misinterpretations.

While the model strives for emotional nuance, fully grasping subtle human emotions in voice can still be a challenge, potentially affecting the quality of empathetic responses. This capability is seamlessly integrated into GPT-4o’s unified architecture, allowing it to process spoken language, understand its context, and generate appropriate vocal responses with minimal delay. It is invaluable for developers aiming to build highly interactive and accessible applications.

Nevertheless, ensuring consistent low-latency performance across varying network conditions and device capabilities remains a technical challenge, and the quality of the voice output, while advanced, may still be distinguishable from natural human speech in some instances.

To utilize GPT-4o’s real-time voice and conversation capabilities, users simply speak naturally into a microphone connected to the application or interface powered by GPT-4o. The model processes the audio input instantly, understands the spoken query, and generates a vocal response that is delivered back to the user with minimal delay, creating a fluid and dynamic conversational experience.

3. GPT-4o Advanced Vision and Visual Reasoning

GPT-4o Advanced Vision and Visual Reasoning is a perception feature that allows the AI model to interpret and understand visual inputs, performing complex visual analysis. This capability extends beyond simple object recognition, enabling GPT-4o to reason about spatial relationships, infer context from images, and even understand data presented in charts and graphs.

It is widely adopted by professionals in fields such as data analysis, content creation, and accessibility, where extracting insights from visual information or generating descriptive text for images is crucial. This feature empowers users to interact with visual content in a more intelligent and comprehensive manner.

A common application involves analyzing complex infographics or scientific diagrams to extract key data points and summarize findings. Users can upload an image containing a chart, and GPT-4o can interpret the data, identify trends, and provide a textual explanation. While this offers significant advantages in automating visual data interpretation, its accuracy can be influenced by the clarity and complexity of the visual input. Highly abstract or poorly rendered images might lead to less precise reasoning.

The advanced vision capabilities are deeply embedded within GPT-4o’s unified architecture, allowing for a cohesive understanding of visual information alongside text and audio. This makes it an indispensable tool for applications requiring sophisticated image analysis and content generation.

However, the computational resources required for high-resolution image processing and complex visual reasoning can be substantial, potentially affecting the speed of analysis for very large or intricate visual datasets. Developers must also consider the limitations in interpreting subjective visual cues or artistic expressions.

To engage GPT-4o advanced vision and visual reasoning, users typically upload an image or provide a visual input through a camera feed, often accompanied by a textual prompt asking for analysis or description. The model then processes the visual data, interprets its content and context, and generates a detailed textual response, such as a description of the image, an analysis of a chart, or answers to questions about the visual information.
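When the image is a local file rather than a hosted URL, one common approach is to embed it as a base64 data URL. The sketch below shows that encoding step and pairs the result with a question about a chart. The helper names are hypothetical, the sample bytes are only a PNG file signature standing in for real image data, and the part layout assumes the same Chat Completions content format.

```python
import base64


def image_bytes_to_data_url(image_bytes, mime_type="image/png"):
    """Encode raw image bytes as a base64 data URL (hypothetical helper)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_chart_question(image_bytes, question):
    """Pair a question with an inline image as a list of content parts."""
    return [
        {"type": "text", "text": question},
        {"type": "image_url", "image_url": {"url": image_bytes_to_data_url(image_bytes)}},
    ]


# In real use the bytes would come from a file, e.g. open(path, "rb").read().
sample_bytes = b"\x89PNG\r\n\x1a\n"  # PNG signature only, not a full image
content = build_chart_question(sample_bytes, "What trend does this chart show?")
print(content[1]["image_url"]["url"][:22])  # prints "data:image/png;base64,"
```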

4. GPT-4o Multilingual and Latency-Optimized Architecture

GPT-4o’s Multilingual and Latency-Optimized Architecture is a performance and accessibility feature designed to support multiple languages with fast response times, facilitating global use.

This architectural design ensures that the model can understand and generate content effectively across a wide array of languages, while simultaneously delivering quick and efficient responses crucial for real-time applications. It is particularly valuable for international businesses, global communication platforms, and diverse user bases, enabling seamless interaction and content delivery regardless of linguistic background.

A common use case for this architecture is in global customer support systems or real-time translation services, where rapid and accurate communication across different languages is essential. Users benefit from the ability to interact in their native tongue and receive immediate responses, significantly enhancing accessibility and user satisfaction.

However, while GPT-4o supports many languages, the depth of understanding and nuance might vary, with less common languages potentially exhibiting slight variations in quality compared to widely spoken ones. Maintaining consistent performance and cultural appropriateness across all supported languages can also be a complex challenge.

This optimized architecture is a cornerstone of GPT-4o’s design, allowing it to efficiently process linguistic data and generate outputs with minimal delay, making it highly effective for applications requiring broad linguistic coverage and speed. It streamlines the development of global applications by reducing the need for separate language models.

Nevertheless, the computational resources required to maintain high performance across numerous languages and ensure low latency can be substantial, and network conditions can still influence the perceived speed of responses for users in different geographical locations.

To leverage GPT-4o’s multilingual and latency-optimized architecture, users simply interact with the model in their preferred language, or specify a target language for output. The model detects or applies the chosen language, processes the input, and generates a response quickly, which supports a fluid and globally accessible user experience.

5. GPT-4o Enhanced Coding and Data Analysis

GPT-4o Enhanced Coding and Data Analysis is a productivity tool designed to assist with code generation, debugging, and complex data interpretation. This feature empowers developers, data scientists, and analysts by automating routine coding tasks, identifying errors in existing code, and providing insightful summaries of large datasets. It serves as an intelligent assistant, capable of understanding programming logic and statistical concepts, thereby accelerating development cycles and improving the efficiency of data-driven decision-making processes across various industries.

A typical application involves generating boilerplate code for specific functions or frameworks, or explaining complex algorithms in simpler terms. Developers can prompt GPT-4o with a problem description, and it can suggest relevant code snippets or even complete functions, significantly reducing development time.

While this capability boosts productivity, the generated code should always be reviewed and tested by a human expert, as it may occasionally contain logical errors, security vulnerabilities, or suboptimal solutions. Similarly, data interpretations require critical oversight to ensure statistical accuracy and avoid misrepresentation.
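One way to make that review step concrete is to gate generated code behind a syntax check and a few known input/output cases before accepting it. The sketch below is a minimal version of that idea; the helper name and the sample "generated" function are invented for illustration, and because `exec()` runs arbitrary code it should only be used on code that has been read first or inside a sandbox.

```python
import ast


def check_generated_function(source, func_name, cases):
    """Syntax-check generated code, then run it against known cases.

    source: the code text returned by the model.
    cases: list of (args_tuple, expected_result) pairs.
    Returns a list of failure messages; an empty list means every check passed.
    """
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg} (line {exc.lineno})"]

    namespace = {}
    exec(source, namespace)  # unsafe for untrusted code outside a sandbox
    func = namespace.get(func_name)
    if not callable(func):
        return [f"function {func_name!r} not defined"]

    failures = []
    for args, expected in cases:
        try:
            result = func(*args)
        except Exception as exc:  # report crashes instead of propagating them
            failures.append(f"{func_name}{args} raised {exc!r}")
            continue
        if result != expected:
            failures.append(f"{func_name}{args} returned {result!r}, expected {expected!r}")
    return failures


# Stand-in for model output: a function with an off-by-one bug.
generated = "def count_vowels(text):\n    return sum(ch in 'aeiou' for ch in text) + 1\n"
print(check_generated_function(generated, "count_vowels", [(("banana",), 3)]))
```

Passing checks like these does not replace human review, since a small set of cases cannot rule out security issues or untested edge cases.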

This feature is integrated to understand and generate programming languages and interpret structured and unstructured data, making it an invaluable asset for technical professionals. It streamlines workflows by providing quick access to coding solutions and data insights. However, the model’s understanding is based on its training data, meaning it might not be up-to-date with the very latest libraries, frameworks, or highly niche programming paradigms. Data privacy and security concerns also necessitate careful handling when feeding sensitive code or proprietary data into the model for analysis.

To utilize GPT-4o’s enhanced coding and data analysis capabilities, users provide specific prompts detailing their coding requirements, such as “write a Python function for X” or “explain this SQL query,” or upload datasets for interpretation. The model then generates relevant code, identifies potential issues, or provides a comprehensive analysis and summary of the data, assisting users in their development and analytical tasks.

6. GPT-4o Unified Model for Text, Audio, and Image Processing

GPT-4o’s Unified Model for Text, Audio, and Image Processing represents a groundbreaking architectural design that integrates all modalities into a single, cohesive AI model for seamless processing.

Unlike previous approaches that often relied on separate or loosely coupled components for different data types, GPT-4o’s unified design allows for a truly holistic understanding and generation of content across text, audio, and visual inputs. This foundational feature is critical for enabling the advanced multimodal interactions that define GPT-4o, making it a versatile tool for developers and researchers pushing the boundaries of AI applications.

A common use case for this unified model is in creating highly interactive and context-aware applications, such as smart home assistants that can respond to spoken commands, interpret visual cues from a camera, and provide textual information on a screen, all within a single, fluid interaction. The benefit is a more natural and intuitive user experience, as the AI can draw connections across different types of information.

However, the complexity of managing and optimizing a single model for such diverse data types means that computational demands can be high, and ensuring consistent, top-tier performance across all modalities simultaneously can be a significant engineering challenge.

This architectural design streamlines the development process by providing a single API endpoint for multimodal tasks, simplifying integration and reducing overhead for developers. It fosters innovative application development by enabling AI to perceive and interact with the world in a more human-like manner. Nevertheless, the sheer scale and complexity of training such a unified model require immense computational resources and vast datasets, which can contribute to the model’s operational costs.

To interact with GPT-4o’s unified model, users can provide any combination of text, audio, or image inputs within a single prompt or conversation, without needing to specify the modality. The model seamlessly processes these mixed inputs, leveraging its integrated understanding to generate a coherent and contextually relevant output that can also span multiple modalities, providing a truly unified AI experience.

7. GPT-4o Low-Latency Response System

GPT-4o’s Low-Latency Response System is a critical performance feature engineered to deliver quick and efficient responses, which is crucial for real-time applications. This system is designed to minimize the delay between a user’s input and the model’s output, ensuring a smooth and interactive experience.

It is particularly vital for applications such as live customer service chatbots, interactive gaming, real-time translation, and dynamic content generation, where even a fraction of a second can impact user satisfaction and the overall utility of the AI. The focus on speed makes GPT-4o highly effective for dynamic and conversational AI tasks.

A common application is in live virtual assistants that engage in back-and-forth conversations, where immediate responses are necessary to maintain a natural dialogue flow. Users benefit from the feeling of interacting with a highly responsive entity, which enhances engagement and reduces frustration.

However, while the system is optimized for low latency, actual response times can still be influenced by external factors such as the user’s internet connection speed, the geographical distance to OpenAI’s servers, and the current server load. Under peak demand, minor fluctuations in response speed might occur, though efforts are made to mitigate these.
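Because these factors vary by user and over time, it can help to measure latency directly rather than assume it. The sketch below times repeated calls and reports the median, 95th percentile, and maximum in milliseconds. The `send_request` argument is a placeholder for real client code, and the `time.sleep` call merely simulates a request.

```python
import math
import statistics
import time


def measure_latency(send_request, runs=20):
    """Time repeated calls and summarize latency in milliseconds.

    send_request is any zero-argument callable that performs one request.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        send_request()
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    # Nearest-rank 95th percentile: the smallest sample with at least
    # 95% of all samples at or below it.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


# Simulated request that takes about 10 ms.
stats = measure_latency(lambda: time.sleep(0.01), runs=5)
print({name: round(value, 1) for name, value in stats.items()})
```

Reporting a high percentile alongside the median makes occasional slow responses under peak load visible, which an average alone would hide.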

This feature is a direct result of GPT-4o’s optimized architecture and efficient processing pipelines, allowing it to quickly interpret inputs and generate outputs across all modalities. It is invaluable for developers building applications where speed and fluidity of interaction are paramount.

Nevertheless, achieving consistently ultra-low latency across a global user base and for highly complex multimodal queries remains an ongoing engineering challenge. Developers must also consider the trade-off between response speed and the depth of processing required for certain tasks, as extremely complex requests might inherently take slightly longer to process.

To experience GPT-4o’s low-latency response system, users simply interact with the model as they normally would, whether through text, voice, or image inputs. The system is designed to process these inputs and generate outputs with near-instantaneous speed, providing a fluid and highly responsive interaction that feels natural and immediate.

8. GPT-4o Knowledge Cutoff and Updated Data Training

GPT-4o’s Knowledge Cutoff and Updated Data Training feature defines the scope of its training data and provides information on updates, which is essential for understanding the model’s temporal limitations. Like all large language models, GPT-4o is trained on a vast dataset that is current up to a specific date, known as its knowledge cutoff.

This means the model can draw on information available before this date but has no built-in knowledge of events or developments that have occurred since. This feature is crucial for users to manage expectations regarding the currency of the information provided by the AI.

A common scenario where the knowledge cutoff is relevant is when users query recent news events, scientific discoveries, or rapidly evolving topics. While GPT-4o can provide comprehensive historical context, it may state its limitations or answer from its last training update, which might be outdated. This helps users understand why certain recent facts might be missing or why the model cannot comment on very current affairs.

A limitation is that for time-sensitive applications, users must supplement GPT-4o’s responses with real-time information from other sources, as relying solely on the model for current events could lead to inaccuracies. OpenAI regularly updates its models through new training cycles, which shifts the knowledge cutoff forward, but these updates are not continuous or real-time. This feature is important for transparency, allowing users to understand the temporal boundaries of the model’s knowledge base.

However, users should be aware that even with updates, there will always be a lag between real-world events and the model’s awareness of them. Furthermore, pushing the model to generate information beyond its cutoff date can sometimes lead to “hallucinations” or plausible-sounding but incorrect information, underscoring the need for critical evaluation.

To account for GPT-4o’s knowledge cutoff, users should be mindful of the date of their query relative to the model’s last known training update. When asking about recent events, users should expect responses based on the available training data, often accompanied by a disclaimer about the knowledge cutoff, prompting them to verify information for the most current details.
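In an application, this check can be made explicit by comparing the date a question concerns with the model's cutoff and supplying externally retrieved context when the topic is too recent. The sketch below illustrates the idea. The cutoff date, function names, and prompt wording are assumptions for this example, and the actual cutoff should be taken from the documentation of the model version in use.

```python
from datetime import date

# Assumed cutoff for illustration only.
ASSUMED_CUTOFF = date(2023, 10, 1)


def needs_fresh_sources(topic_date, cutoff=ASSUMED_CUTOFF):
    """Return True when a topic postdates the assumed knowledge cutoff."""
    return topic_date > cutoff


def build_prompt(question, topic_date, retrieved_context=""):
    """Prepend externally retrieved context when the topic is too recent."""
    if needs_fresh_sources(topic_date) and retrieved_context:
        return (
            "Use only the context below to answer.\n\n"
            f"Context:\n{retrieved_context}\n\n"
            f"Question: {question}"
        )
    return question


prompt = build_prompt(
    "Who won the match?",
    topic_date=date(2024, 6, 1),
    retrieved_context="(text fetched from a current news source)",
)
print(prompt)
```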

How Does GPT-4o Work?

GPT-4o operates on a sophisticated, unified neural network architecture that allows it to process and integrate information from multiple modalities—text, audio, and images—simultaneously. This single-model design enables a cohesive understanding of complex inputs, unlike previous models that often relied on separate components for different data types.

The model works by converting diverse inputs into a common representational space, facilitating real-time analysis and generation across these modalities. For instance, it can interpret spoken language, analyze visual cues in an image, and generate a textual response, all within a single interaction. This architecture is optimized for low-latency responses, making it highly effective for dynamic applications like real-time voice conversations and interactive visual analysis.
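The idea of a common representational space can be illustrated with a toy example in which feature vectors of different sizes are projected to one shared size, so that a single model could consume them as one sequence. OpenAI has not published GPT-4o's internals, so everything below is an assumption made for illustration: the dimensions, the random matrices standing in for learned weights, and the function names.

```python
import random

SHARED_DIM = 4  # size of the shared space (arbitrary for this toy)


def make_projection(in_dim, out_dim, rng):
    """Random in_dim x out_dim matrix standing in for learned weights."""
    return [[rng.uniform(-1, 1) for _ in range(out_dim)] for _ in range(in_dim)]


def project(features, matrix):
    """Map one feature vector into the shared space (vector-matrix product)."""
    out_dim = len(matrix[0])
    return [sum(f * row[j] for f, row in zip(features, matrix)) for j in range(out_dim)]


rng = random.Random(0)
# Each modality starts with its own feature size.
projections = {
    "text": make_projection(3, SHARED_DIM, rng),
    "audio": make_projection(5, SHARED_DIM, rng),
    "image": make_projection(6, SHARED_DIM, rng),
}

inputs = [("text", [0.2, 0.1, 0.7]), ("image", [0.5] * 6), ("audio", [0.1] * 5)]

# After projection every item has the same length, whatever its modality.
sequence = [project(vector, projections[modality]) for modality, vector in inputs]
print([len(vector) for vector in sequence])  # prints [4, 4, 4]
```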

GPT-4o’s understanding comes from extensive training data, which lets it handle new contexts and generate relevant, nuanced outputs. It does not learn continuously after training; its knowledge changes only when OpenAI releases an updated version. Its integrated approach streamlines complex AI tasks, providing a versatile tool for developers and users seeking advanced multimodal capabilities.

How Accurate is GPT-4o?

GPT-4o is generally considered highly accurate across its multimodal capabilities, particularly in understanding and generating human-like text, processing audio, and interpreting images. Its unified architecture contributes to a more coherent and contextually relevant output compared to previous models.

However, like all large language models, GPT-4o’s accuracy can vary depending on the complexity, specificity, and novelty of the input. Users often report high satisfaction with its performance in creative writing, coding assistance, and general knowledge queries. For highly specialized or niche topics, its accuracy might be limited by the scope of its training data, which has a specific knowledge cutoff.

While GPT-4o strives for factual correctness, it can occasionally produce “hallucinations” or plausible-sounding but incorrect information, especially when prompted with ambiguous or leading questions. Therefore, critical evaluation of its outputs, particularly for sensitive or factual-critical applications, remains essential.

What Are the Pros of GPT-4o?

GPT-4o offers numerous advantages for developers, researchers, and content creators seeking advanced AI capabilities. The main pros of GPT-4o are listed below.

- Unified Multimodal Processing. GPT-4o seamlessly integrates and processes text, audio, and image inputs within a single model, enabling a cohesive understanding and generation of diverse content.

- Real-Time Interaction Capabilities. GPT-4o offers low-latency responses and advanced real-time voice and conversation features, making it highly effective for dynamic and interactive applications.

- Advanced Vision and Visual Reasoning. The model excels at interpreting and understanding visual inputs, performing complex visual analysis and generating relevant outputs based on visual cues.

- Versatile Application Development. Designed for developers, researchers, and content creators, GPT-4o facilitates the creation of innovative applications across various domains due to its comprehensive multimodal understanding and generation.

- Flexible Pricing and Accessibility. GPT-4o provides flexible pricing plans, including a Free plan and ChatGPT Plus for individuals, and usage-based API access for developers, ensuring broad accessibility.

- Enhanced Efficiency and Performance. Its optimized architecture delivers fast response times and efficient processing across multiple languages, crucial for global and high-performance applications.

GPT-4o is ideal for users and developers who require a powerful, versatile, and efficient AI model capable of handling complex multimodal tasks and delivering real-time, intelligent interactions.

What Are the Cons of GPT-4o?

GPT-4o, despite its groundbreaking multimodal capabilities, also comes with certain limitations and drawbacks that users and developers should carefully consider. These cons primarily revolve around its operational costs, data currency, and inherent challenges common to advanced AI models.

The main cons of using GPT-4o are discussed below.

- High Operational Cost. Accessing GPT-4o’s advanced features, especially through its API, can be expensive. The usage-based billing model and higher token costs compared to smaller models can limit accessibility for individual developers or projects with tight budgets.

- Knowledge Cutoff. GPT-4o operates with a specific knowledge cutoff date, meaning it cannot access or process information that has emerged since its last training update. This can lead to outdated or incomplete responses when querying recent events or rapidly evolving topics.

- Potential for Inaccuracies and Hallucinations. While highly capable, GPT-4o is not immune to generating plausible-sounding but incorrect information, often referred to as “hallucinations.”

- Dependency on Internet Connectivity. As a cloud-hosted service, GPT-4o requires a stable and continuous internet connection for all its functionalities. This dependency restricts its use in offline environments or situations with unreliable network access.

- Ethical Concerns and Bias. Trained on vast datasets from the internet, GPT-4o may inadvertently reflect and perpetuate biases present in that data. This raises ethical considerations regarding fairness, representation, and the potential for generating harmful or discriminatory content.

- Complexity for Highly Niche Applications. While versatile, GPT-4o’s generalized training may not provide the deep, specialized expertise required for highly niche or domain-specific tasks.

These cons indicate that while GPT-4o is a powerful and versatile AI, its limitations in cost, data freshness, and inherent AI challenges necessitate careful planning and critical evaluation for effective and responsible deployment across various applications.

What is the GPT-4o Rating?

GPT-4o, OpenAI’s multimodal AI model, has garnered significant attention across the AI community and among early adopters. Aggregated ratings differ widely by platform, and a simple average of the three platform scores listed below comes to about 3.7 out of 5.

This reflects its capabilities alongside user concerns, particularly regarding its operational costs and the inherent limitations of large language models. User sentiment and expert reviews on major AI and software platforms range from very low to very high, with key platforms reporting the ratings below.

- Trustpilot. 1.6/5 (586 reviews).

- G2. 4.7/5 (12 reviews).

- Capterra. 4.7/5 (36 reviews).
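The figure of roughly 3.7 out of 5 corresponds to averaging the three platform scores equally. Weighting each score by its number of reviews gives a much lower result, because Trustpilot accounts for most of the reviews. The short calculation below shows both, using only the numbers listed above.

```python
# (platform, rating out of 5, number of reviews) as listed above
ratings = [("Trustpilot", 1.6, 586), ("G2", 4.7, 12), ("Capterra", 4.7, 36)]

# Each platform counts once, regardless of how many reviews it has.
simple_average = sum(rating for _, rating, _ in ratings) / len(ratings)

# Each review counts once, so large platforms dominate.
total_reviews = sum(count for _, _, count in ratings)
weighted_average = sum(rating * count for _, rating, count in ratings) / total_reviews

print(f"Simple average:   {simple_average:.2f}")    # prints 3.67
print(f"Weighted average: {weighted_average:.2f}")  # prints 1.83
```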

The reception across various platforms highlights both the revolutionary pros and the practical cons of GPT-4o. The most common feedback regarding GPT-4o revolves around its high operational costs, the presence of a knowledge cutoff leading to outdated information, and the occasional generation of inaccuracies.

Users who continue with GPT-4o highly value its unified multimodal processing, real-time interaction capabilities, advanced vision, and its versatility for developing innovative applications, despite the cost and occasional need for output verification.

What do Reddit Users think about GPT-4o?

Reddit users share varied opinions regarding GPT-4o’s performance, with some perceiving a significant decline in its capabilities, particularly concerning nuance and contextual understanding.

One Reddit user expressed that GPT-4o has become “basically GPT 5,” lamenting the loss of “subtext, context, reading between the lines, nuance, and insight.” They noted a stark difference in its writing assistance, stating that “the feeling, the tone, is gone,” even with consistent prompts and settings.

Another user described GPT-4o as “worthless” for their projects due to a lack of context or memory across conversations, making it unusable for long-term tasks. This led them to cancel their Plus subscription, stating there was “no point in paying for something that’s not useful anymore.”

How does GPT-4o Treat its Long-term Subscribers?

OpenAI, the developer of GPT-4o, does not offer a formal loyalty program or specific long-term subscriber incentives beyond the benefits inherent in its subscription tiers and API usage models. For ChatGPT Plus subscribers, continued access to the latest GPT-4o features and higher usage limits is provided at a stable monthly rate of $20.

Developers utilizing the GPT-4o API benefit from a usage-based billing model, where costs are directly tied to token consumption. Long-term API users, especially those leveraging the more economical GPT-4o Mini, can optimize their operational costs through consistent and efficient use of the service, benefiting from continuous model updates and performance enhancements.
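
To make the usage-based model concrete, here is a minimal Python sketch of how a monthly bill could be estimated from token counts. It assumes the $2.50 starting price mentioned earlier applies per one million input tokens; the output price of $10.00 per million tokens and the function name `estimate_cost` are placeholders for illustration only, so check OpenAI's current pricing page before relying on any figure.

```python
def estimate_cost(input_tokens, output_tokens,
                  input_price_per_million, output_price_per_million):
    """Estimate API spend in dollars from token counts and per-million prices."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_million
    output_cost = (output_tokens / 1_000_000) * output_price_per_million
    return input_cost + output_cost


# Assumed prices (not confirmed by this article): dollars per 1M tokens.
ASSUMED_INPUT_PRICE = 2.50
ASSUMED_OUTPUT_PRICE = 10.00

# Example workload: 2M input tokens and 500K output tokens in a month.
monthly_cost = estimate_cost(2_000_000, 500_000,
                             ASSUMED_INPUT_PRICE, ASSUMED_OUTPUT_PRICE)
print(f"${monthly_cost:.2f}")  # $10.00
```

Because cost scales linearly with tokens, trimming prompts or routing simpler requests to a cheaper model such as GPT-4o Mini lowers the bill in direct proportion.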

How Responsive is GPT-4o Customer Support?

The responsiveness and availability of GPT-4o’s customer support receive mixed reviews from its user base. While OpenAI typically offers various support channels, including potential live chat or ticketing systems, user experiences highlight both commendable aspects and areas needing improvement.

Some users praise the support team for being knowledgeable and helpful, particularly during initial onboarding or when addressing straightforward issues. However, other users have reported slow response times, especially for more complex queries. Feedback indicates that “customer support response times could be faster for resolving more complex queries,” and a general sentiment suggests that “customer support could be more responsive.”

There have also been instances where users found communication unclear or experienced difficulties in reaching support during stated availability, pointing to potential inconsistencies in coverage or clarity.

How Reliable and Legit is GPT-4o as an AI Model?

GPT-4o is a legitimate, highly capable, and widely used AI model developed by OpenAI, but its reliability is not absolute. Like all generative AI, it is susceptible to inaccuracies, and its performance can vary depending on the task and context.

OpenAI provides clear documentation, terms of service, and support options for active users. GPT-4o performs well for most teams managing multimodal AI tasks, but the potential for inaccuracies and its knowledge cutoff shape how some users evaluate its reliability.

What is the Best Alternative to GPT-4o?

For general AI tasks, Gemini is widely regarded as the best alternative to GPT-4o. It offers a robust set of features and capabilities that make it a strong competitor in the multimodal AI space.

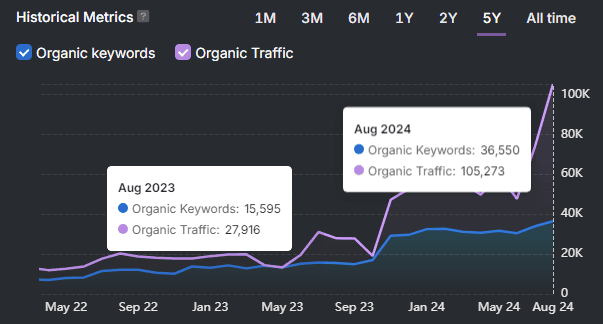

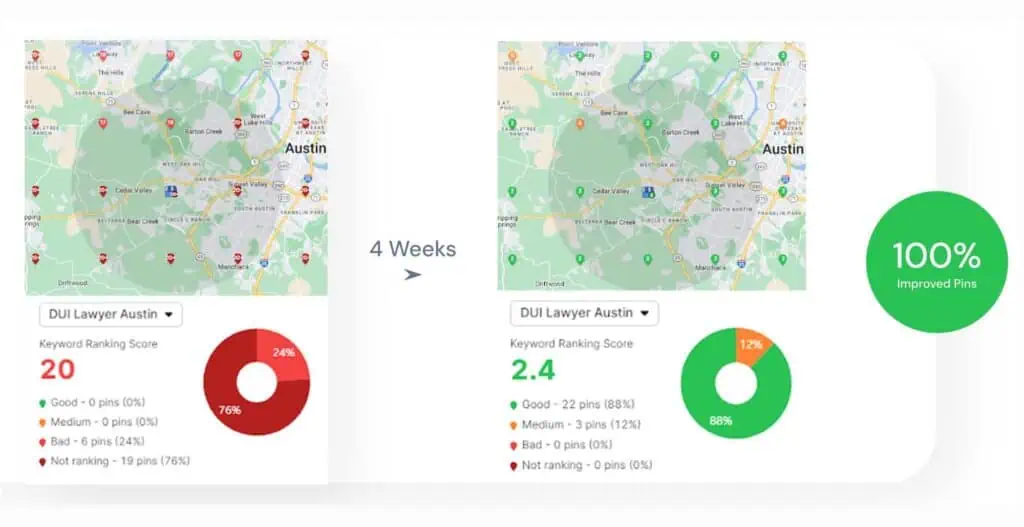

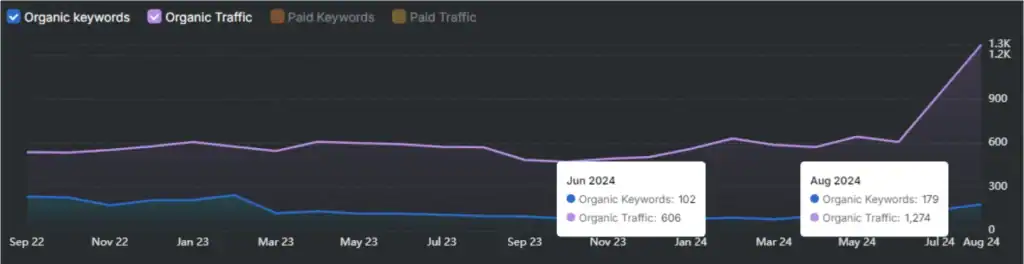

However, if your primary use for GPT-4o is content creation and SEO, and you require more advanced, specialized tools, Search Atlas emerges as a strong alternative. It’s an all-in-one SEO platform with an AI-powered writing tool that optimizes content with keyword suggestions, topic insights, and readability improvements.

Search Atlas is a full SEO and PPC platform whose all-in-one content tool, Content Genius, includes an AI writer, optimizer, and editor. The platform offers affordability and scalability, which makes it an ideal choice for businesses of all sizes. Search Atlas enables companies to expand their SEO efforts without overwhelming costs.

This ensures accessibility while meeting diverse needs for content writing, optimization, and editing. LLM Visibility by Search Atlas is the first enterprise-ready platform to measure brand mentions, sentiment, share of voice, and how you rank inside ChatGPT.

Are there any other GPT-4o Alternatives?

Yes, other AI tools offer robust alternatives to GPT-4o, each with unique strengths for various applications. Prominent competitors include Claude, Copilot, and Perplexity, which cater to different user needs and preferences.

Claude offers complex reasoning, long-context understanding, and ethical AI principles. Copilot provides code generation, document summarization, and email drafting for productivity. Perplexity AI delivers direct answers with sources for research and information retrieval.

Each of these AI tools supports specific parts of the AI workflow. The right choice depends on your feature priorities, integration needs, and preferred interaction style.

Do AI Professionals rank GPT-4o among the best AI models?

No. Initially, AI professionals did rank GPT-4o among the best AI models, recognizing its groundbreaking multimodal capabilities and unified architecture. It was highly praised for its ability to seamlessly process text, audio, and image inputs, setting a new standard for AI interaction and application development.

However, with the rapid advancement in the AI landscape, newer and more specialized models have emerged, often surpassing GPT-4o in specific benchmarks or offering enhanced capabilities. While still a powerful and versatile tool, its position at the absolute forefront has evolved as the field continues to innovate.

Which Features Are Exclusive to GPT-4o?

GPT-4o’s most exclusive features revolve around its unified neural network architecture, which enables seamless, real-time processing and integration of text, audio, and image inputs within a single model. This unified approach allows for unprecedented low-latency responses and natural, real-time voice and visual interactions that differentiate it from models relying on separate components for different modalities.

While other AI models offer multimodal capabilities, GPT-4o’s ability to understand and generate content across these diverse inputs in a truly integrated, real-time fashion, optimized for speed and efficiency, sets a new benchmark.

This architecture facilitates advanced vision and real-time conversational experiences that are highly cohesive and contextually aware, making it uniquely powerful for dynamic applications.

What Is the Difference Between GPT-4o and Other AI Models?

The difference between GPT-4o and other AI models lies primarily in GPT-4o’s unified multimodal architecture, which enables seamless, real-time processing of text, audio, and image inputs within a single model, whereas other models often specialize or use separate components.

GPT-4o is engineered for low-latency, cohesive interactions across modalities, allowing it to interpret spoken language, analyze visual cues, and generate textual responses all within a single, dynamic interaction.

Competitors like Claude excel in complex reasoning and long-context understanding, while Gemini offers robust multimodal capabilities with strong integration across Google’s ecosystem. Copilot focuses on productivity tasks like code generation and document summarization, and Perplexity AI specializes in providing direct answers with sources for research.

While all aim to provide advanced AI, GPT-4o is optimized for integrated, real-time multimodal experiences, making it ideal for dynamic applications. Other models might be preferred for their specific strengths in areas like ethical AI, coding assistance, or detailed research.

Search Atlas goes beyond individual AI models by providing an all-in-one SEO platform that leverages advanced AI for content optimization, technical audits, and keyword tracking, offering a comprehensive solution for digital marketing.

How Does GPT-4o Compare to Gemini or Claude?

GPT-4o, Gemini, and Claude are leading multimodal AI models, each with distinct strengths. GPT-4o stands out for its unified neural network architecture, enabling seamless, real-time processing of text, audio, and image inputs within a single model, optimized for low latency and cohesive interactions.

Gemini, developed by Google, offers robust multimodal capabilities with deep integration across Google’s extensive ecosystem, making it versatile for various applications. Claude, from Anthropic, is recognized for its advanced complex reasoning and a strong emphasis on ethical AI principles, making it suitable for tasks requiring nuanced comprehension and safety.

What is the Difference Between GPT-4, GPT-4 Turbo, and GPT-4o?

GPT-4, GPT-4 Turbo, and GPT-4o represent successive stages in the evolution of OpenAI’s flagship models. They differ primarily in speed, context window size, cost-efficiency, and the depth of multimodal integration.

GPT-4 was the initial powerful text-based model, offering advanced reasoning and generation capabilities. GPT-4 Turbo introduced significant improvements in speed, a much larger context window, and reduced pricing, along with enhanced vision capabilities. GPT-4o, the “omni” model, further refines this by integrating text, audio, and image processing into a single, unified neural network, delivering unprecedented low-latency responses and real-time multimodal interactions at an even more competitive price point for API usage.

Each model serves different needs: GPT-4 laid the groundwork for advanced AI, GPT-4 Turbo is optimized for more extensive and faster text-centric applications, and GPT-4o is designed for cutting-edge, real-time multimodal applications requiring seamless integration of diverse inputs.

Search Atlas leverages the power of advanced AI models, including the latest iterations, to provide comprehensive content optimization and SEO insights, ensuring users benefit from the most current and effective AI capabilities for their digital strategies.

How Does GPT-4o Power LLM Visibility?

GPT-4o significantly enhances LLM Visibility by providing advanced capabilities for understanding and generating content across various modalities, which is crucial for how information is processed and presented by large language models.

Its unified architecture allows for real-time analysis of text, audio, and image inputs, enabling a more comprehensive grasp of context and nuance in user queries and generated responses. This deep multimodal understanding helps LLMs to better interpret user intent and deliver more relevant and accurate information, thereby improving their overall visibility and utility in diverse applications.

Use the Search Atlas LLM Visibility tool, an enterprise-ready platform that measures brand mentions, sentiment, share of voice, and how you rank inside ChatGPT. It combines automated performance improvements with complete SEO visibility, uniting page speed, technical audits, and keyword tracking in a single platform.

Is GPT-4o available in ChatGPT Plus?

Yes, GPT-4o is available to ChatGPT Plus subscribers. This subscription tier provides enhanced access and higher usage limits for the GPT-4o model compared to the free plan.

Can You Use ChatGPT-4o Offline?

No, you cannot use GPT-4o offline. It is a cloud-hosted service, so it requires a stable and continuous internet connection to function: all processing and model interactions occur on OpenAI’s servers.