Large language models (LLMs) reference external URLs to ground their answers. URL freshness measures how recently a cited page was published relative to the moment the model produced the response. Freshness reveals whether the model draws from new material or from older content shaped by training data.

SEO and AI researchers debate how much web search access influences the age of cited sources. The missing piece is large-scale evidence that shows how search-enabled and search-disabled systems differ when selecting URLs.

This study examines 150,000 citations from OpenAI, Gemini, and Perplexity. The dataset contains 90,000 citations with search enabled and 60,000 citations with search disabled. Publication dates were extracted for 14,681 URLs to measure freshness with precision across both conditions.

The findings reveal clear patterns. Search-enabled models anchor their answers in recent webpages, often published within a few hundred days of the response. Search-disabled modes restrict the model to internal knowledge and cached references. These patterns show that retrieval access governs the freshness and structure of citations inside LLM responses.

Methodology – How Was URL Freshness Calculated?

This experiment measures how LLMs cite external webpages under different retrieval conditions. The analysis evaluates the age of the URLs that appear in LLM responses and examines how search access changes the freshness of those citations.

URL freshness was calculated by measuring the number of days between the publication date of a cited page and the timestamp of the LLM response that referenced it. This method shows how recently each source was published relative to the moment the model produced the answer.

The dataset integrates 2 primary components listed below.

Web Search Enabled Dataset. Contains 90,000 citations from OpenAI, Gemini, and Perplexity between October 28 and November 6, 2025. Each platform contributed 30,000 citations. Each record includes the cited URL, the response timestamp, and platform identifiers.

Web Search Disabled Dataset. Contains 60,000 citations from OpenAI and Gemini between October 1 and October 19, 2025. Each platform contributed 30,000 citations with search disabled. Perplexity does not offer a disabled-search mode, so it does not appear in this dataset.

These datasets provide 150,000 citations for freshness measurement across both conditions.

Publication dates were required for every evaluable URL. Homepage links were removed because they do not contain publication metadata. Each remaining URL was scanned for trusted fields (published_time, og:published_time, datePublished). Valid timestamps were recorded. This process produced 14,681 publication dates.
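The extraction step above can be illustrated with a minimal sketch. It assumes the trusted fields appear as `<meta>` tags keyed by `property`, `name`, or `itemprop`, and that values are ISO 8601 strings. The study does not describe its parser, so the helper names (`extract_publication_date`, `_MetaDateParser`) are hypothetical. Pages that publish `datePublished` only inside JSON-LD would need extra handling that is not shown here.

```python
from datetime import datetime
from html.parser import HTMLParser
from typing import Optional

# Field names as listed in the methodology. How the study matched them is an assumption.
TRUSTED_FIELDS = {"published_time", "og:published_time", "datePublished"}


class _MetaDateParser(HTMLParser):
    """Records the content of the first <meta> tag whose key is a trusted field."""

    def __init__(self) -> None:
        super().__init__()
        self.found: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.found is not None:
            return
        attr = dict(attrs)
        key = attr.get("property") or attr.get("name") or attr.get("itemprop")
        if key in TRUSTED_FIELDS and attr.get("content"):
            self.found = attr["content"]


def extract_publication_date(html: str) -> Optional[datetime]:
    """Return a parsed timestamp, or None when no valid trusted field exists."""
    parser = _MetaDateParser()
    parser.feed(html)
    if parser.found is None:
        return None
    try:
        # Normalize a trailing "Z" so older Python versions can parse it.
        return datetime.fromisoformat(parser.found.replace("Z", "+00:00"))
    except ValueError:
        return None  # Ambiguous or malformed timestamps are discarded.
```

Returning `None` for malformed values mirrors the strict rule described above: only valid timestamps are recorded.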

Freshness measures the time between the publication of a page and the LLM response that cited it. Freshness was calculated by subtracting the publication date from the response timestamp. The result reflects how recent the cited source was at the time of generation.
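The subtraction described above can be sketched in a few lines. The function name `freshness_days` and the example dates are illustrative assumptions, not values from the dataset.

```python
from datetime import datetime, timezone


def freshness_days(published_at: datetime, responded_at: datetime) -> int:
    """Whole days between page publication and the LLM response that cited it."""
    return (responded_at - published_at).days


# Hypothetical example: a page published on August 1, 2025 and cited on October 30, 2025.
published = datetime(2025, 8, 1, tzinfo=timezone.utc)
responded = datetime(2025, 10, 30, tzinfo=timezone.utc)
example_freshness = freshness_days(published, responded)
print(example_freshness)
```

A lower value means a fresher citation. Both timestamps should share a timezone (UTC is assumed here) so the difference is not shifted by offsets.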

The analytical steps are listed below.

1. Review freshness distributions across all platforms.

2. Compare freshness between search-enabled and search-disabled responses.

3. Examine platform-level differences in citation age.

Only responses generated in 2025 were included to preserve temporal consistency. URLs published at any earlier time were eligible.

This framework creates a structured view of citation recency and shows how search access reshapes the age profile of URLs in LLM responses.

What Is the Final Takeaway?

The analysis shows that web search access has a direct impact on the freshness and structure of LLM citations. Enabled mode pulls recently published pages into answers, while disabled mode limits models to older material rooted in their training data. This contrast reveals how each system balances live information with internal knowledge.

Perplexity demonstrates the strongest retrieval behavior. Perplexity's reliance on constant search produces a steady mix of fresh and mid-aged sources, which reflects continuous access to live webpages.

Gemini produces the freshest citations when search is enabled, but its performance changes sharply without retrieval. Disabled mode pushes Gemini toward homepage-level URLs and older content, which indicates heavy dependence on pretraining.

OpenAI shows the most resilience across both conditions. OpenAI continues to surface relatively recent, article-level URLs even without active search, which suggests broader internal coverage.

Freshness patterns track the retrieval condition. Search-enabled systems cite pages published within a few hundred days of the response, while disabled systems shift toward older material.

- Gemini is the most recency-focused when retrieval is enabled.

- Perplexity maintains strong recency through breadth.

- OpenAI spans the widest publication range and produces the largest share of older sources.

These findings confirm that retrieval governs citation recency, specificity, and depth inside LLM responses. Search access increases freshness, while disabled modes reveal how each model defaults to internal knowledge. Freshness becomes a clear indicator of how LLMs source information and how their behavior changes once retrieval is present or removed.

How Do LLMs Behave When Web Search Is Enabled?

I, Manick Bhan, together with the Search Atlas research team, analyzed 90,000 citations generated with web search enabled to measure how retrieval changes the structure and freshness of URLs in LLM responses.

The breakdown of how OpenAI, Gemini, and Perplexity behave when search is enabled is listed below.

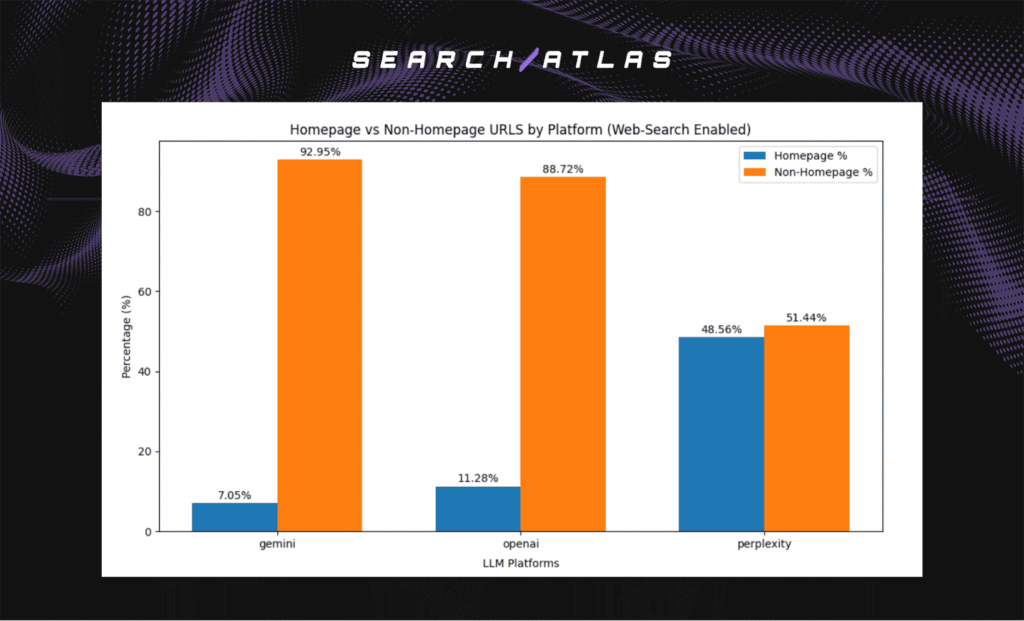

Homepage vs Non-Homepage Citations

This analysis measures how often each model cites real article pages rather than top-level domains. Article pages matter because they contain publication metadata, which allows freshness to be calculated. Homepage URLs rarely expose timestamps, which limits freshness evaluation.

The headline results are shown below.

- Gemini. 92.95% non-homepage URLs.

- OpenAI. 88.72% non-homepage URLs.

- Perplexity. 51.44% non-homepage URLs and 48.56% homepage URLs.

Gemini and OpenAI consistently cite specific content pages instead of general domain homepages, which enables stronger freshness analysis. Perplexity displays the most balanced split, which reflects a broader and more varied retrieval pattern.
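The homepage split above can be approximated with a simple URL check. This sketch assumes a homepage is any URL with an empty or root path and no query string or fragment. The study does not state its exact rule, and the function names are hypothetical.

```python
from typing import Iterable
from urllib.parse import urlparse


def is_homepage(url: str) -> bool:
    """Assumed rule: empty or root path, with no query string or fragment."""
    parts = urlparse(url)
    return parts.path in ("", "/") and not parts.query and not parts.fragment


def non_homepage_share(urls: Iterable[str]) -> float:
    """Percentage of URLs that point to something other than a homepage."""
    url_list = list(urls)
    if not url_list:
        return 0.0
    non_home = sum(1 for u in url_list if not is_homepage(u))
    return 100.0 * non_home / len(url_list)


# Hypothetical URLs for illustration only.
sample_urls = [
    "https://example.com/",
    "https://example.com",
    "https://example.com/blog/post-1",
    "https://example.org/news/2025/item?id=3",
]
sample_share = non_homepage_share(sample_urls)
```

A stricter rule could also treat paths such as `/index.html` or locale roots such as `/en/` as homepages. That choice would change the reported percentages.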

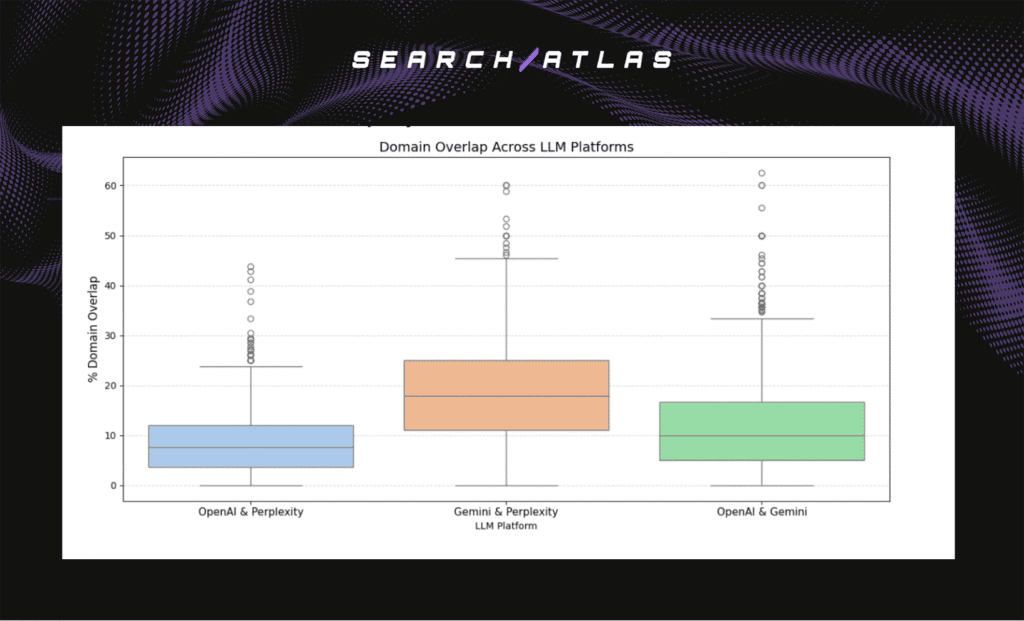

Domain Overlap Across Platforms

This analysis measures how often the models cite the same domains for the same query. Domain overlap matters because it shows whether the systems retrieve similar sources when search is enabled or whether they diverge and construct distinct citation patterns.

The headline results are shown below.

- OpenAI and Perplexity. Median overlap is around 5% to 12%.

- Gemini and Perplexity. Widest range, spanning 0% to 60%.

- OpenAI and Gemini. Consistently low overlap, around 5% to 15%.

All model pairs display low domain overlap, which confirms that they retrieve largely different sources even under identical retrieval conditions.

- OpenAI and Perplexity diverge the most, showing minimal shared domains.

- Gemini and Perplexity achieve the highest potential overlap, but with extreme variation across queries.

- OpenAI and Gemini show stable, low alignment, indicating that they rarely cite the same domains.
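Per-query domain overlap between two platforms can be sketched as follows. The study does not name its overlap metric, so this example assumes Jaccard overlap (shared domains divided by all distinct domains). The function names are hypothetical.

```python
from typing import Iterable
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Lowercased hostname with a leading 'www.' removed."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def domain_overlap(urls_a: Iterable[str], urls_b: Iterable[str]) -> float:
    """Jaccard overlap, in percent, between the domains cited by two platforms."""
    a = {domain_of(u) for u in urls_a}
    b = {domain_of(u) for u in urls_b}
    union = a | b
    if not union:
        return 0.0
    return 100.0 * len(a & b) / len(union)


# Hypothetical citations returned by two platforms for the same query.
platform_a = ["https://www.example.com/a", "https://news.example.org/b"]
platform_b = ["https://example.com/c", "https://other.net/d"]
overlap_pct = domain_overlap(platform_a, platform_b)
```

In this example the platforms share one of three distinct domains, so the overlap is about 33%. Computing this value for every query and taking the median per platform pair would give figures comparable in form to the ranges listed above.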

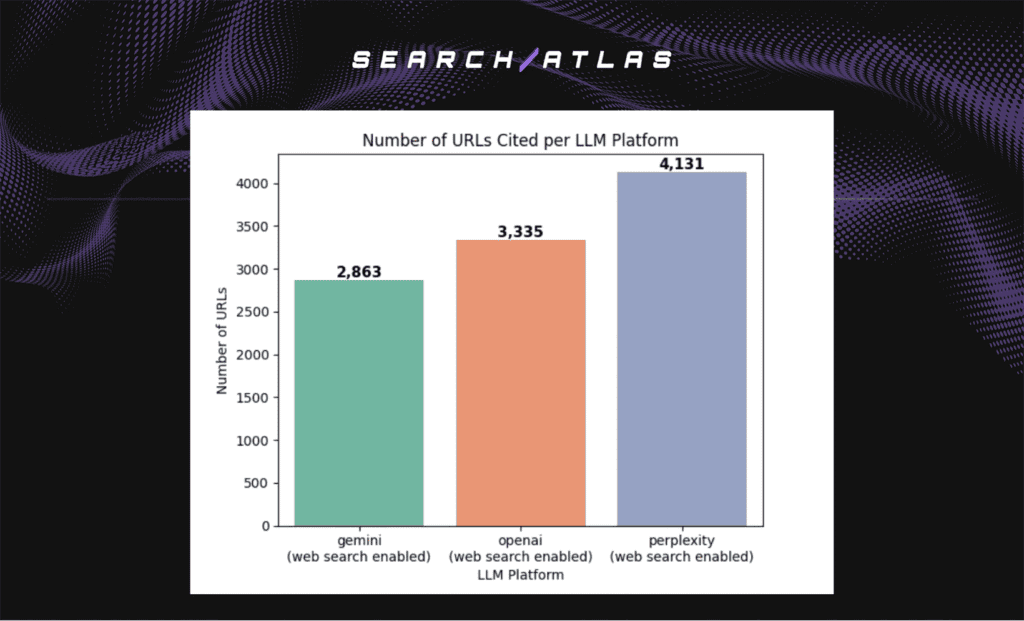

Extracted Publication Dates per Platform

This analysis measures how often each model returns article-level URLs with valid publication metadata. The count of extractable publication dates determines how much freshness data can be evaluated for each platform.

The headline results are shown below.

- Perplexity. 4,131 URLs with publication timestamps.

- OpenAI. 3,335 URLs with publication timestamps.

- Gemini. 2,863 URLs with publication timestamps.

Perplexity is the most citation-dense model because it produces many article pages despite shorter responses. OpenAI retrieves broadly and returns many URLs with valid timestamps. Gemini generates longer outputs and fewer citations, which reduces the number of extractable dates even though it cites non-homepage URLs at a high rate.

Normalized Sampling for Fair Cross-Platform Comparison

The analysis requires equal sample sizes to compare freshness patterns fairly across platforms.

The headline result is shown below.

The smallest platform, Gemini, with 2,863 extractable timestamps, established the baseline. A random sample of 2,863 URLs was selected from OpenAI and Perplexity to match this count.

This normalization ensures fair cross-platform comparison. Each model contributes the same volume of publication-dated URLs, which prevents citation density differences from influencing freshness distributions.
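The normalization step can be sketched as random downsampling to the smallest platform's count. The fixed seed and the function name `downsample_to_smallest` are assumptions added for reproducibility. The placeholder integers stand in for the dated URLs.

```python
import random
from typing import Dict, List


def downsample_to_smallest(samples: Dict[str, List[int]], seed: int = 0) -> Dict[str, List[int]]:
    """Randomly sample every platform down to the size of the smallest one."""
    rng = random.Random(seed)  # Seed chosen here only to make the sketch repeatable.
    target = min(len(items) for items in samples.values())
    return {name: rng.sample(items, target) for name, items in samples.items()}


# Placeholder records sized to the counts reported above.
dated_urls = {
    "Perplexity": list(range(4131)),
    "OpenAI": list(range(3335)),
    "Gemini": list(range(2863)),
}
balanced = downsample_to_smallest(dated_urls)
```

Sampling without replacement keeps every selected URL unique, and the smallest platform keeps all of its records.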

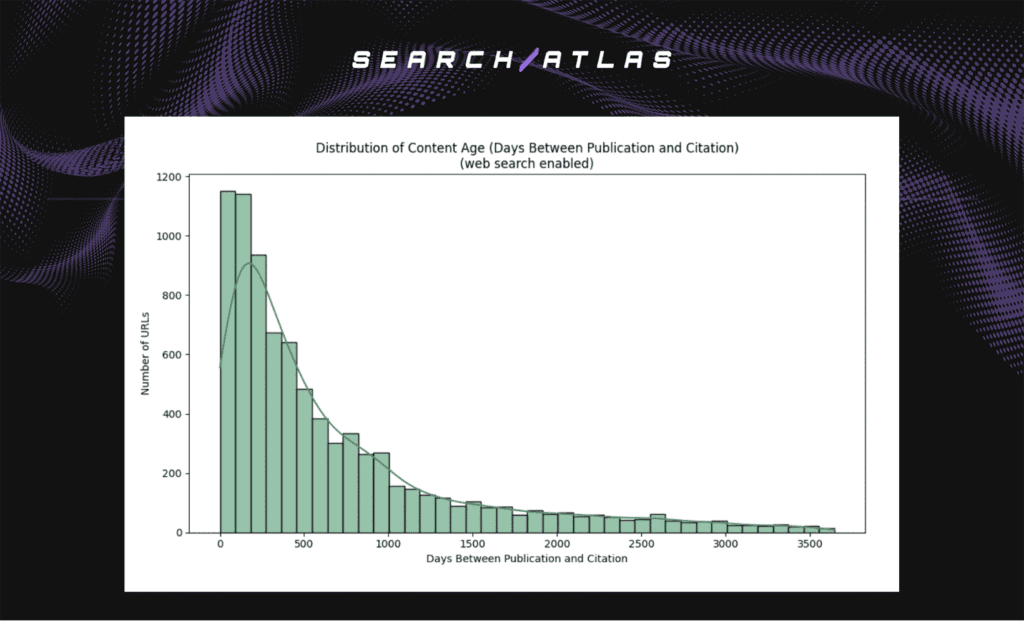

Distribution of Content Age (All LLMs)

This analysis measures how recently cited pages were published relative to the model response. The distribution covers the last 10 years of publication dates.

The headline results are shown below.

- Most cited URLs fall within the first few hundred days of publication.

- Citation activity peaks between 0 and 300 days.

- A smaller but meaningful tail extends into older sources.

The distribution is strongly right-skewed. A large share of URLs have freshness values near zero, which shows that many pages were published only weeks or months before citation. The curve then tapers into a long tail, which confirms that while recent content dominates, older material still appears when relevant.
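One way to check for this shape in a freshness sample is to compare the mean with the median and count how many values fall inside the first 300 days. The values below are hypothetical and are not drawn from the study. The function name is an assumption.

```python
from statistics import mean, median
from typing import Dict, List


def summarize_freshness(days: List[int], window: int = 300) -> Dict[str, float]:
    """Share of citations inside the window, plus median and mean age in days."""
    within = sum(1 for d in days if 0 <= d <= window)
    return {
        "share_within_window": within / len(days),
        "median": median(days),
        "mean": mean(days),
    }


# Hypothetical freshness values in days, for illustration only.
example_days = [5, 20, 45, 90, 150, 280, 700, 1500, 3200]
summary = summarize_freshness(example_days)
```

A mean well above the median, as in this toy sample, is a typical sign of a right-skewed distribution with a long tail of older pages.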

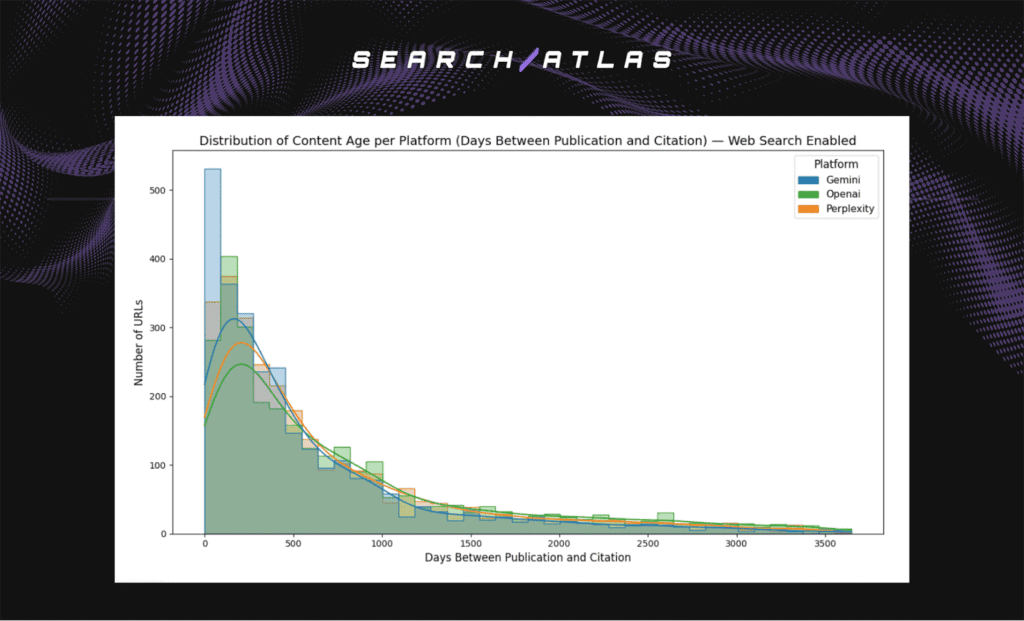

Distribution of Content Age (Per LLM Platform)

This analysis measures how recently each platform tends to cite published pages when search is enabled. The distribution spans the last 10 years of publication dates.

The headline results are shown below.

- All three models show a right-skewed age distribution. Most citations fall within the first 300 days after publication.

- Gemini demonstrates the strongest recency preference. Its density is highest near zero days, with a sharp decline as the content ages.

- Perplexity shows a balanced pattern. It cites many fresh URLs but extends into older material more often than Gemini.

- OpenAI cites the widest age range. Its distribution contains more older pages, which produces the heaviest long tail among the three models.

Gemini retrieves the freshest content overall. Perplexity maintains a balanced mix of recent and moderately old sources. OpenAI surfaces the broadest span of publication ages and the largest share of older URLs while still retrieving a meaningful volume of recent pages.

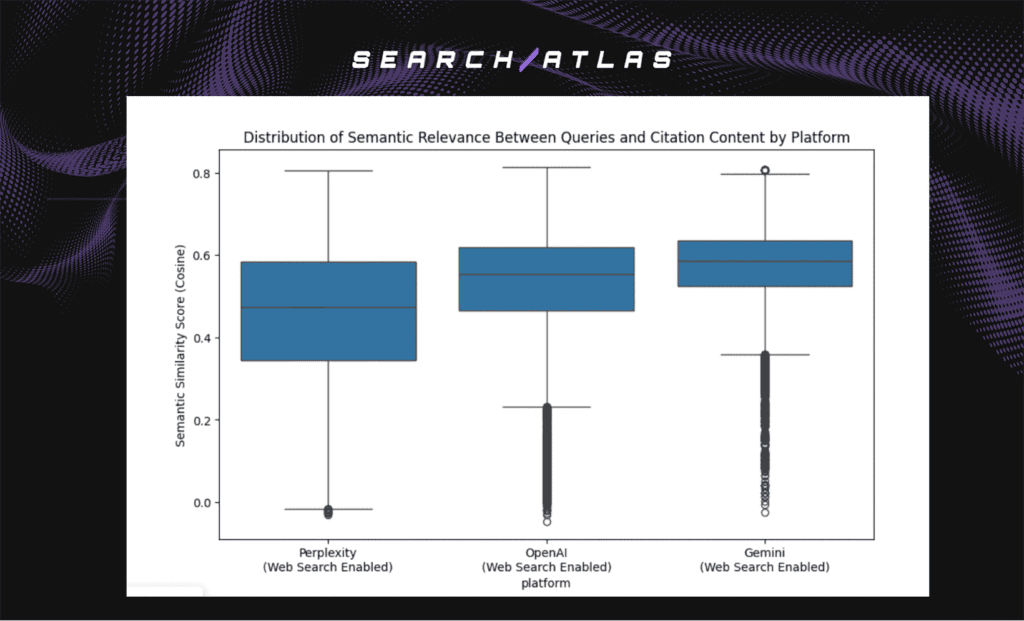

Semantic Relationship Between Queries and Cited URL Content

This analysis measures how well each cited URL matches the meaning of the user query. Strong semantic alignment means the model retrieves pages that directly answer the question. Weak alignment means the model retrieves pages that relate only loosely to the topic.

The headline results are shown below.

- Gemini median similarity. ≈0.57 to 0.60

- OpenAI median similarity. ≈0.52 to 0.56

- Perplexity median similarity. ≈0.45 to 0.48

Gemini shows the strongest and most consistent match between the query and the cited page. OpenAI follows with stable and reliable alignment. Perplexity shows the widest spread, retrieving some highly relevant pages but many with a weaker connection to the query.
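The similarity scores above are consistent with a cosine-style measure between a query embedding and a page embedding. The study does not name its embedding model or metric, so the sketch below is an assumption. It shows plain cosine similarity over two equal-length vectors, which would come from whatever embedding model is used.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # Convention chosen for this sketch when a vector is all zeros.
    return dot / (norm_a * norm_b)


# Hypothetical two-dimensional vectors standing in for real embeddings.
query_vec = [1.0, 1.0]
page_vec = [1.0, 0.0]
example_similarity = cosine_similarity(query_vec, page_vec)
```

Scores near 1 indicate that the cited page closely matches the query. Scores near 0 indicate a loose topical relationship.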

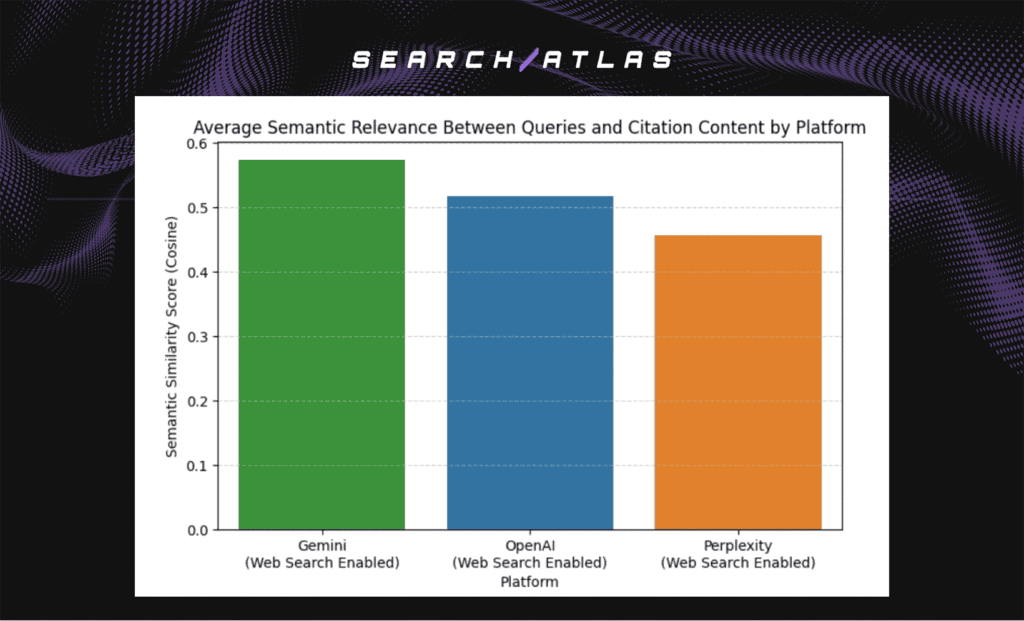

Average Semantic Similarity Across Platforms

The average semantic similarity across all queries highlights how each model’s design shapes the quality of its citations. The headline results are shown below.

- Gemini average similarity. Highest and most consistent across queries

- OpenAI average similarity. Strong and stable performance

- Perplexity average similarity. Lowest with the greatest variability

Gemini maintains the strongest overall alignment, which confirms that it retrieves content that closely reflects query intent. OpenAI performs well and stays consistent across queries. Perplexity shows the lowest averages, which indicates unpredictable relevance between the question and the cited content.

How Do LLMs Behave When Web Search Is Disabled?

I, Manick Bhan, together with the Search Atlas research team, analyzed 60,000 citations generated with web search disabled to measure how the absence of retrieval changes URL structure and freshness. Perplexity does not offer a disabled-search mode, so the comparison focuses on OpenAI and Gemini.

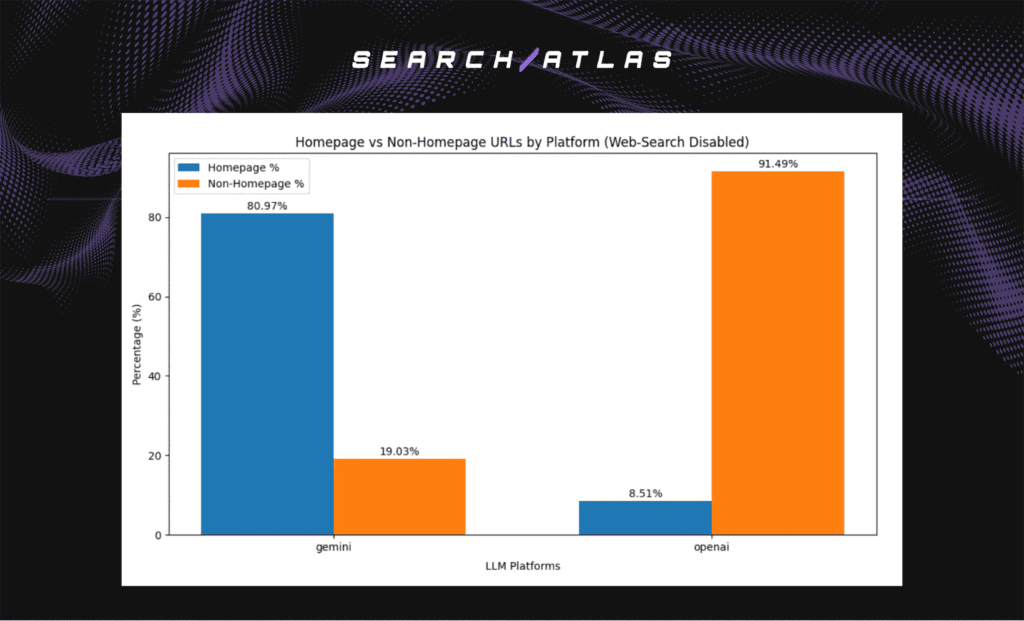

Homepage vs Non-Homepage / Content URLs

This analysis measures how often each model cites real article pages when retrieval is unavailable. Article pages matter because they contain publication metadata. Homepage URLs do not provide this information.

The headline results are shown below.

- Gemini returns homepage URLs in most responses. It rarely cites article-level pages without search access.

- OpenAI cites article-level URLs in over 91% of responses. It continues to surface real content pages even without retrieval.

- Gemini falls back to top-level domains when retrieval is disabled. This shift prevents publication dates from being extracted and limits freshness measurement.

Gemini moves almost entirely toward root domains in disabled mode, which shows heavy dependence on active retrieval. OpenAI maintains content-level citation behavior, which allows freshness to be evaluated even when search is unavailable.

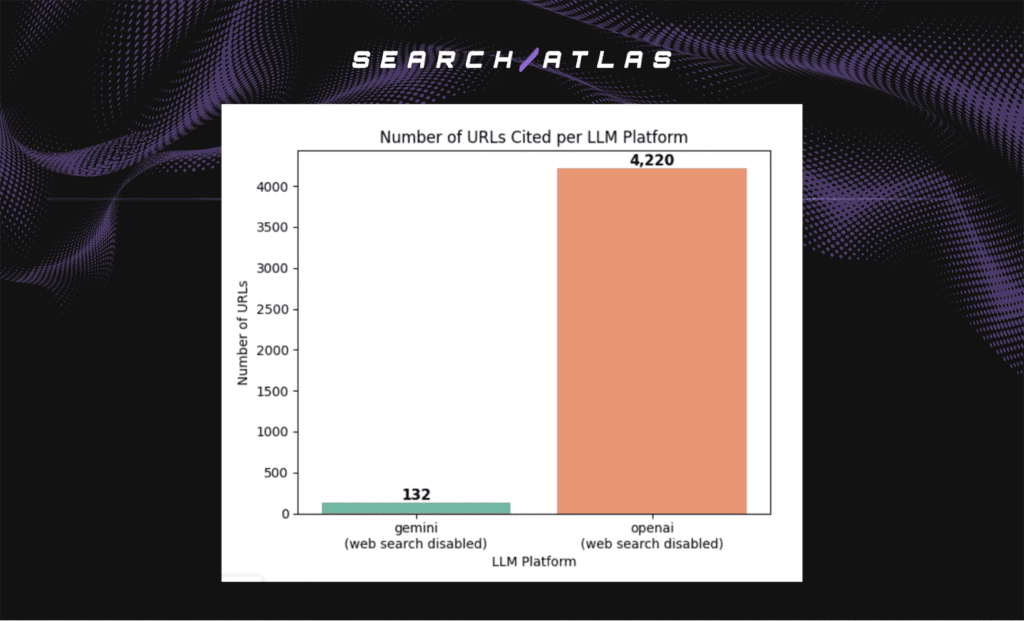

Extractable Publication Dates

This analysis measures how often each model references article-level URLs with valid publication metadata when retrieval is unavailable. Extractable timestamps matter because they determine how much freshness data can be measured in disabled-search conditions.

The headline results are shown below.

- OpenAI produced 4,220 URLs with extractable publication dates.

- Gemini produced 132 URLs with extractable publication dates.

OpenAI continues to surface real content pages without retrieval, which generates thousands of URLs containing clean publication metadata.

Gemini shows a sharp drop in extractable timestamps. Only 5,710 of its 30,000 samples contain non-homepage URLs, and strict extraction rules identify valid dates for only 132 of them. This occurs because Gemini cites homepages in most responses, and those pages do not provide usable publication fields.
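The size of this drop can be worked out from the counts reported above. The short calculation below uses only those three figures. The variable names are illustrative.

```python
# Counts reported above for Gemini with search disabled.
total_samples = 30_000
non_homepage_urls = 5_710
dated_urls = 132


def pct(part: int, whole: int) -> float:
    """Percentage rounded to two decimal places."""
    return round(100.0 * part / whole, 2)


non_homepage_rate = pct(non_homepage_urls, total_samples)  # share of samples with article-level URLs
date_yield = pct(dated_urls, non_homepage_urls)            # share of those URLs with a valid date
overall_yield = pct(dated_urls, total_samples)             # share of all samples with a valid date
```

Roughly 19% of Gemini's disabled-search samples contain a non-homepage URL, about 2.3% of those URLs yield a valid date, and under 0.5% of all samples end up in the freshness analysis.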

These patterns show that web search access increases the number of article-level URLs with verified timestamps. Gemini is the most constrained model when retrieval is disabled.

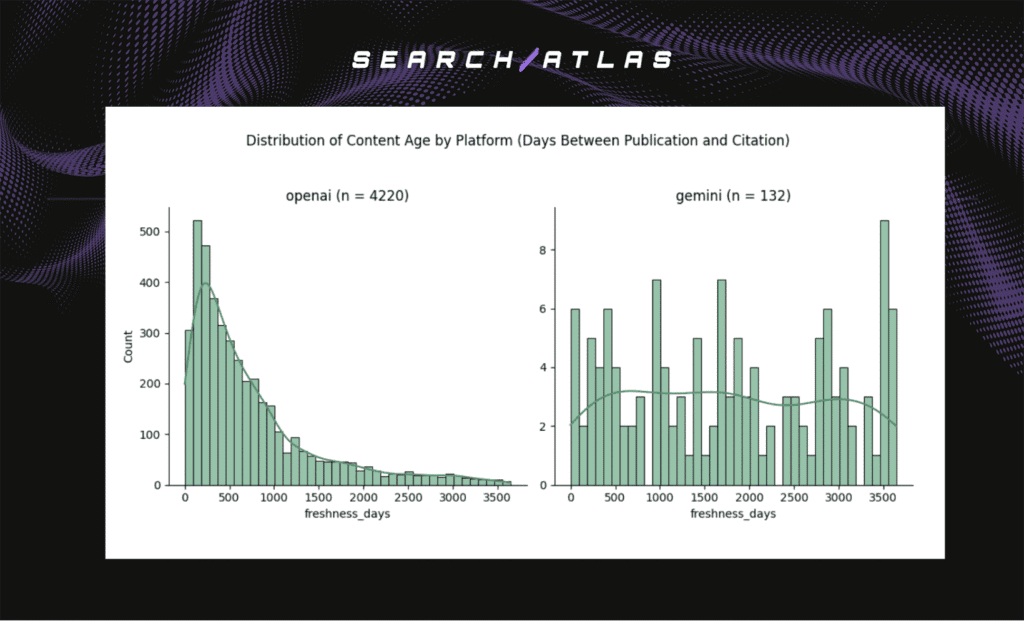

Distribution of Content Age

This distribution measures how recently pages were published relative to the response when search is disabled. The comparison shows whether each model continues to reference fresh material or defaults to older sources without retrieval.

The headline results are shown below.

- Gemini cites far older material, centered between 2,500 and 3,500 days.

- OpenAI cites content concentrated between 0 and roughly 1,200 days, with a strong peak under 500 days.

OpenAI continues to reference relatively recent article-level content in disabled-search mode. Its citations cluster within the last 3 to 4 years, with many pages falling under 500 days.

Gemini shifts almost entirely toward long-aged sources without retrieval. Most cited pages are between 7 and 10 years old, which reflects heavy dependence on older information learned during pretraining.

This divergence aligns with homepage behavior.

OpenAI contributes 4,220 URLs with valid publication dates, while Gemini produces only 132 because most of its citations point to homepages. The absence of retrieval exposes clear differences in how each model sources information when fresh content is unavailable.

Which LLM Model Produces the Freshest Citations?

The 3 leading models (Perplexity, OpenAI, Gemini) were analyzed to determine which system cites the most recently published webpages. The comparison measures publication-date freshness, article-level URL behavior, and reliance on search access to show how each model sources information under search-enabled and search-disabled conditions.

The breakdown showing which model produces the freshest citations is outlined below.

Gemini: Highest Freshness With Retrieval

Gemini produces the freshest citations when web search is enabled. Gemini responses concentrate near zero-day freshness, often citing pages published only weeks or months before the model generated the answer. This pattern confirms that Gemini leverages retrieval aggressively to surface recent, article-level content.

The Gemini freshness distribution drops sharply as publication age increases, which demonstrates a strong preference for new information. When search is enabled, Gemini behaves like a recency-optimized retrieval system designed to anchor answers in current material.

Perplexity: Balanced Freshness From Continuous Retrieval

Perplexity cites a balanced mix of fresh and moderately aged URLs. Perplexity retrieves a large volume of article-level pages and maintains a strong recency signal, though not as concentrated as Gemini.

The Perplexity distribution extends into older material more frequently, which reflects its broad retrieval style. The pattern shows that Perplexity behaves like a high-coverage search model that blends fresh sources with deeper archival pages while maintaining consistent access to current information.

OpenAI (GPT): Resilient Freshness Even Without Search

OpenAI demonstrates strong resilience in freshness behavior. With search enabled, OpenAI retrieves many recently published pages, though with a wider age range than Gemini or Perplexity.

When search is disabled, OpenAI continues to cite moderately recent articles at a high rate. It produces thousands of extractable publication dates, which confirms that GPT surfaces structured, content-rich URLs even without live-web assistance.

OpenAI's pattern reveals a model anchored in article-level content regardless of retrieval mode, which maintains meaningful recency through internal knowledge and link-based reasoning.

What Should SEO and AI Teams Do?

SEO and AI teams need to treat URL freshness as a core visibility signal. Fresh citations inside LLM responses show whether a brand’s content remains present and relevant in AI-generated answers.

Teams need to optimize content for query-level relevance. Pages with clear topical focus, verified publication dates, and strong semantic alignment are cited more frequently across search-enabled systems. Structured metadata and recent updates increase the likelihood that models classify a page as current and contextually correct.

Tracking freshness across platforms reveals how often newer pages enter the citation stream and how they compete against older sources.

- Gemini shows the strongest recency bias when search is active.

- OpenAI maintains fresh citations even when search is disabled.

- Perplexity cites frequently but draws from a broader and less consistent range of publication ages.

These patterns influence which brands appear in AI answers and how their content is positioned.

Strategic planning must account for these differences. Align content updates, metadata structure, and publication velocity with platform-level freshness trends to secure visibility across emerging AI discovery channels.

Brands that publish consistently, maintain clean article metadata, and reinforce topical clarity achieve the strongest presence in search-enabled LLM environments.

What Are the Limitations of the Study?

Every dataset and retrieval condition introduces constraints. The limitations of this study are listed below.

- Homepage Bias in Disabled Mode. Gemini returns a high volume of homepage URLs when search is disabled, which reduces the number of extractable publication dates and limits freshness comparisons.

- Uneven Extractable Date Coverage. Only 14,681 URLs across both datasets contained valid publication metadata. Models that cite fewer article-level pages produce smaller comparable samples.

- Temporal Scope. The dataset spans October 1 to November 6, 2025. The timeframe provides a focused view of retrieval behavior but does not capture longer-term stability or seasonal variation.

- Publication Date Reliability. Even with strict extraction rules, metadata fields vary across websites. Some pages provide incomplete or ambiguous timestamps that reduce the total evaluable samples.

Despite these limits, the analysis establishes a clear baseline for understanding how retrieval access affects citation freshness. The findings reveal consistent behavioral differences across models and provide a foundation for broader longitudinal research on LLM citation dynamics.